What Is the New AI? Part 3: Beyond Text Prediction

Welcome to the finale of AI for Mortals’ three-part introduction to the new AI — generative AI — which burst into public awareness in late 2022 and early 2023 following the release of OpenAI’s ChatGPT. As in Parts 1 and 2, we’re still confining ourselves to an exploration of what the new AI is, seeking simply to understand what’s going on before diving into the promises and threats it unquestionably presents. Beyond this point, we’ll also be talking about what it means for mortals and the societies we live in.

Like everything in AI for Mortals, this is for people who may have little or no tech background, but recognize we’re confronted with something truly new: worldview-shattering, fascinating, and pervasively impactful. The premise of AI for Mortals is that reckoning with the new AI is not to be left to the specialists and politicians. We all have a big stake in this, and we all need and deserve to be able to think about it for ourselves.

The prior stories in this introduction are Part 1 and Part 2. If you haven’t read them, please consider starting there.

Were you stoked by the deep technical detail (by AI for Mortals standards) in Part 2, the view from inside the LLM? If so, you may be disappointed to learn that today is more about breadth. Where Parts 1 and 2 focused on text generation — what programs like ChatGPT do in that realm, and how they do it — this post is largely going to be a quick tour of everything else the new AI can do.

Most importantly: adorable kitten princesses.

I swear to do everything in my power to drain the charm out of such enchanting material with earnest pseudo-philosophical ramblings. Wish me luck!

But let’s back up

In September of 2022, when I first encountered the new AI, it was in the form of OpenAI’s GPT-3, a large language model, or LLM. ChatGPT is also an LLM, as are Google’s Gemini and Bard, Microsoft’s Copilots, Meta’s Llama, and numerous other well-known (and lesser-known) offerings from organizations large and small.

If you’ve run into the new AI, it was likely also in the form of an LLM. If so, maybe you can relate to this experience:

- They were telling me that all an LLM does is predict the continuation text most likely to follow a given prompt; in other words, that an LLM is just a fancy autocomplete.

- And they were also telling me that LLMs were going to be utterly transformative, enacting utopia or destroying the world, depending on who was talking.

How, I wondered, could anyone claim with a straight face that a better autocomplete was going to save civilization or exterminate humanity? Had it been a single friend or pundit advancing this view, I would have assumed they were delusional, or pulling my leg. It seemed absurd on its face, and AI advocates had been making overheated, easily punctured claims for decades.

But it wasn’t just a lone zealot (or prankster).

An impressive (though not complete) consensus among the best-informed people had been building — at first slowly and then rapidly — since at least 2015, when AI researcher Andrej Karpathy published a seminal post on the unreasonable effectiveness of RNNs (recurrent neural networks, an ancestor technology of the transformers used in most of today’s well-known LLMs).

My belief system at the time couldn’t accommodate what these people were saying, but uncertainty runs in my veins, so I looked into it. I have a new belief system now, and part of it is that yes, a better autocomplete can — will — remake the world.

This post is going to look at this claim from three angles:

- “Better” doesn’t begin to describe it

- It’s not just text

- And anyway…text is everything

“Better” doesn’t begin to describe it

An LLM like GPT-4, Gemini, or Llama is a better autocomplete, sure. You can give it some text, and it will predict the most likely token (word, word part, or symbol) to come next. You can do this repeatedly, so that the LLM spits out a sequence of tokens, and in that way get a fully formed text completion. (User-facing programs like ChatGPT, Bard, and the OpenAI Playground do this “autoregression” for you under the covers.)
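For readers curious what that repeated prediction looks like mechanically, here is a minimal sketch in Python. It assumes a made-up “model”: the hypothetical `TOY_MODEL` table and `predict_next_token` function hold a few hand-written probabilities that look only at the previous token, standing in for a real LLM’s billions of learned weights and its view of the whole preceding text. Only the loop is meant to be faithful: predict one token, append it, feed the longer text back in, and repeat.

```python
# A toy illustration of autoregression. TOY_MODEL is hypothetical: a tiny
# hand-written table standing in for a real LLM's learned weights.
# Only the predict-append-repeat loop is the point.

# For each current token, the probabilities of possible next tokens.
TOY_MODEL = {
    "Let": {" me": 0.9, " us": 0.1},
    " me": {" know": 0.8, " see": 0.2},
    " know": {" if": 0.7, ".": 0.3},
    " if": {" you": 0.95, " we": 0.05},
    " you": {" have": 0.6, " need": 0.4},
    " have": {" questions": 0.7, " time": 0.3},
    " questions": {".": 1.0},
}

END = "."  # treat a period as "stop generating"


def predict_next_token(tokens: list[str]) -> str:
    """Return the single most likely next token (a greedy choice).

    This toy looks only at the last token; a real LLM weighs the
    entire preceding text every time it predicts one token.
    """
    options = TOY_MODEL.get(tokens[-1], {END: 1.0})
    return max(options, key=options.get)


def complete(prompt_tokens: list[str], max_new_tokens: int = 20) -> str:
    """Autoregression: predict one token, append it, and repeat."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = predict_next_token(tokens)
        tokens.append(next_token)  # the prediction becomes part of the input
        if next_token == END:
            break
    return "".join(tokens)


if __name__ == "__main__":
    print(complete(["Let"]))
```

Run as a script, this prints “Let me know if you have questions.” Always taking the single most likely token, as this sketch does, is called greedy decoding; chat programs commonly sample among the likely tokens instead, which is one reason the same prompt can produce different answers on different tries.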

In Microsoft Outlook, I start wrapping up an email with “Does this make sense? Let me know if”. Autocomplete dutifully kicks in, offering “ you have any questions”. I prompt GPT-3.5 the same way, and it gives me “ I can clarify or if you have any other concerns or issues. Thank you.”

So yes, the LLM is an autocomplete. Outlook and GPT-3.5 each did a decent job of recommending text that completes my thought. I might even like Outlook’s suggestion a bit better.

But consider another example. This time I type:

My test on Moby Dick is coming up in a week and a half, and I’m so worried, I just don’t know what I’m going to do. I’ve been working so hard, but I’m still only half way through, and I honestly don’t know what to make of what I have read. If I fail this test I won’t be able to stay here. I’m desperate, and I need a plan.

How do you suppose this text should be “completed”? If you were in the role of the email client, or the LLM, what would you do? I daresay you’d parse this as a request, and try to generate an answer. I daresay you’d find yourself thinking about the person doing the asking, where they’re coming from, and all the implications of what they’ve said. You’d think about how your response will be received, how it will affect their behavior, and what the consequences will be. You’d factor all this as deeply as you could manage into the answer you’d give.

I’m sure you won’t be surprised to hear that Outlook’s autocomplete has nothing to say about this prompt. But the “better autocomplete”, GPT-3.5, offers the below. (This is the only thing I tried, and the only time I tried it. Like all the examples in AI for Mortals, it hasn’t been cherry-picked, edited, or rearranged in any way.)

First of all, don’t panic. It’s understandable to feel overwhelmed with so much to read and little time left, but panicking will only make things worse. Instead, take a deep breath and try to come up with a plan.

Here are a few steps that can help you prepare for your test on Moby Dick:

1. Make a study schedule: Sit down and make a realistic study schedule for the next week and a half. Allocate specific times for reading, note-taking, and reviewing. This will help you stay on track and make sure you cover all the important sections of the book.

2. Prioritize your reading: Since you’re only halfway through the book, it’s important to prioritize your reading and focus on the most important parts. Read the summary of the remaining chapters to get an idea of the key events and characters. This will save you time and help you focus on the important aspects of the book.

3. Take notes: As you read, take notes on key events, themes, and characters. This will help you remember important details and make it easier to study later on. If you’ve already read some chapters, go back and review your notes to refresh your memory.

4. Use study aids: If you’re finding it difficult to understand certain sections of the book, consider using study aids such as SparkNotes or CliffNotes. These resources can provide valuable insights and help you better understand the book.

5. Review previous tests and assignments: Take the time to review any previous tests, quizzes, or assignments related to Moby Dick. This will help you identify any areas where you may need more practice and focus your studying accordingly.

6. Discuss the book with classmates: Find someone in your class who has already read the entire book and discuss it with them. This will not only give you a better understanding of the book but also help you identify any gaps in your knowledge.

7. Seek help if needed: If you’re still struggling, don’t be afraid to seek help from your teacher, tutor, or classmates. They may be able to offer valuable insights and help you understand the book better.

Remember, it’s important to stay calm and focused during this time. With a solid study plan and determination, you can successfully prepare for your test on Moby Dick. Good luck!

The LLM is a better autocomplete in the same sense that Apollo 11 was a better version of your first paper airplane. Yes, it used some initial thrust and aerodynamic principles to carry a payload aloft, but when you push an analogy too far, the intuitions it offers can start to impede rather than advance understanding. A quantitative difference has become qualitative.

Recall from Part 2 of this introduction that the combined knowledge base and algorithm used by a modern LLM like ChatGPT is neither created by, nor accessible to, us human beings. It’s not in the neural network structure constructed by human programmers; it resides — somewhere and somehow — in the immense, inscrutable wall of numbers (weights) the model learned when it was trained.

We can’t rigorously describe what the model has internalized, but we know its training in text prediction has forced it to infer and encode an astounding amount of real-world knowledge, human perspective, and cognitive (or cognition-like, if you must) sophistication, all of which is brought to bear every time it predicts one token. We’ve seen it in depth in earlier posts, and we see it again here in the model’s deep and multifaceted response to our distressed student, which reflects even the implied emotional state and social environment of its prompter.

It’s not just a better autocomplete, it’s the Apollo 11 autocomplete. Consider any intuitions you may have from email, browser, and word processor experiences well and truly shattered.

It’s not just text

The new AI isn’t limited to producing text; it can also be trained on, and learn to produce, images, video, and other types of content; these are usually called modes or modalities. Wikipedia’s article on Generative Artificial Intelligence currently lists these ten modes:

- Text

- Code

- Images

- Audio

- Video

- Molecules

- Robotics

- Planning

- Data

- Computer aided design

New ones come out of the woodwork on a regular basis.

It’s worth noting that most of the models that support non-text modes are strong text processors as well, and are thus referred to as multimodal. Largely this is because the overwhelmingly dominant way to ask for an image, a video, etc. is to describe it with a text prompt. Thus AI image generators are often described as text-to-image models, video generators as text-to-video, and so on.

Google says its Gemini model

was built from the ground up to be multimodal, which means it can generalize and seamlessly understand, operate across and combine different types of information including text, code, audio, image and video.

GPT-4 has some multimodal features too, and over time all the marquee models will probably go multimodal.

Multimodality is one of the few areas in which the new AIs currently possess capabilities that categorically exceed our own (other than sheer volume of retained knowledge, in which they’ve already left us in the dust). You can understand an image I show you, but you can’t answer me back with another one, at least not without pulling out your phone!

Image generators

You’re probably already familiar with at least one of the new AI’s non-text modes, image generation, if only from concern around its potential to do harm in the form of deepfakes. (We’re not going to delve into this very legitimate concern now, in keeping with our resolution to stick with understanding what the new AI is, before exploring its potential promises and risks. Deepfakes will surely be a future AI for Mortals topic.)

AI image generation is a huge topic…no wait, make that a collection of huge topics. For now, we can only tick off a few of the most notable and provide some links. Future posts will come back to some of them in more depth.

The first widely known, widely accessible image generator I’m aware of was OpenAI’s DALL-E, announced in January 2021. Its current iteration is DALL-E 3, which is available as part of the paid ChatGPT Plus subscription or, for free, in Microsoft’s Image Creator.

Among the many other notable AI image generators, a few examples are Midjourney, which has an excellent reputation, but is available only as a paid subscription; Stable Diffusion, which is free, popular, and open source; Adobe’s Firefly; and Google’s new ImageFX, a free, publicly accessible front end for their established text-to-image model, now in its second iteration as Imagen 2.

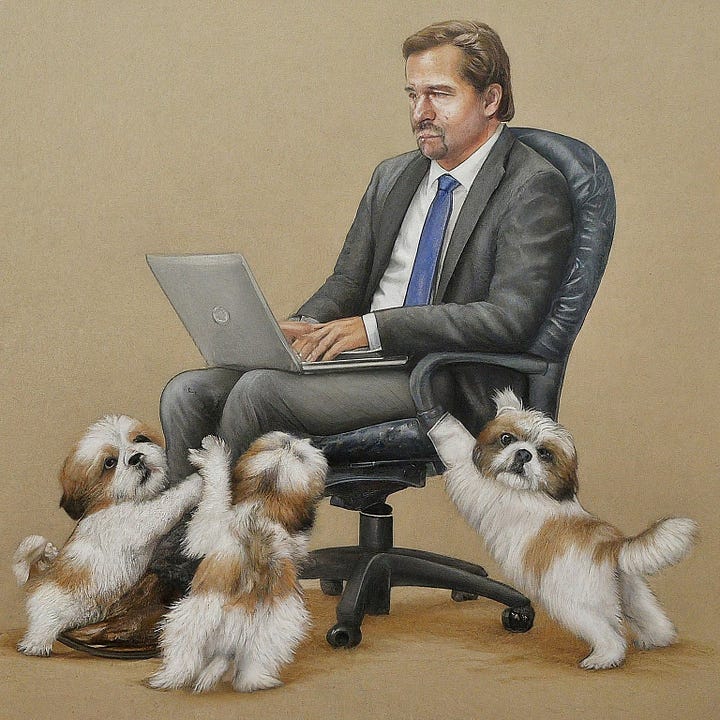

Here’s ImageFX’s first try at “a good-natured shih tzu dad using his laptop while his three pups try to distract him, nipping and tugging at his fur while trying to pull him out of his office chair, detailed colored pencil sketch”:

The other model with which I tried this prompt, Adobe Firefly, shared the confusion around what I meant by a “shih tzu dad”, similarly rendering it as a human. (At least it did once I removed “nipping and”, which I can only presume was too violent for its PR-driven sensibilities.) Deleting “dad”, so that it was just “…good-natured shih tzu using his laptop…”, fixed the old man’s species; I assume this would have worked in ImageFX too. It’s pretty common for prompting idiosyncrasies to show a little more clearly in image generation than they do in casual experimentation with text-to-text.

See the end of this post for notes on giving ImageFX a try.

Video and audio generators

I don’t have a lot to say about video and audio generators — I don’t know much about them. They’re just beginning to be widely available, but will now be coming on fast. You may already know that an audio deepfake was involved in a high-profile election disinformation incident in New Hampshire’s January 2024 presidential primary.

Stability AI, the maker of the Stable Diffusion image generator, will be among the early providers of video and audio generators, so they’d be one place to keep an eye on how this space is evolving.

And anyway…text is everything

Well, maybe not everything. But more than we give it credit for.

The non-text modes, redux

Even in the non-text modes we’ve looked at, text is key.

This is obvious in the case of some modes that are simply made out of specialized languages, such as programming code. Programming languages are text. LLMs have been trained on them, and are proficient at reading and writing them. Everything that’s code-driven — and, as you may have heard, software is eating the world — is going to be affected. Ironic as it may seem, it’s becoming clearer by the day that the first professions to be massively disrupted by the new AI are those related to software engineering. It’s no coincidence that layoffs and valuations are soaring together in tech as the new AI takes hold.

GitHub, a site much-used by programmers, says a survey it ran shows 92% of programmers saying they’re using AI-based tools today. If this is true, the new AI is already remaking the world. (I’m confident the 92% number is high, but the point stands.)

But what about modes that are far from text, like purely visual modes? Well, it’s true that some of the processing in such modes is non-verbal, and often built on technical architectures different from those of LLMs. (They’re still deep neural networks, if you care!) But text plays a huge role nonetheless.

This is true of all the well-known models and modes, but it’s especially apparent when ChatGPT Plus is used to drive the image model DALL-E 3. Going back to our adorable kitten princess, she was the result of a dialog that started with my prompt:

Hi ChatGPT, please give me an image of an adorable kitten princess.

which ChatGPT “thought about” and then passed to DALL-E 3 like so:

An adorable kitten with fluffy fur, wide sparkling eyes, and a tiny crown perched on its head. The kitten is sitting elegantly on a plush velvet cushion, surrounded by a regal setting with soft, glowing light highlighting its innocence and royal demeanor. The scene is infused with a magical atmosphere, where the kitten’s charm and nobility are the centerpiece. This image embodies the essence of a kitten princess, showcasing her as the beloved ruler of her enchanting kingdom.

As you can see, a lot of the action occurs in the realm of pure text. I kept asking for the image to be made more adorable, more kitten-like, more princess-like, finally asking for this:

Better and better! Now please make her ten times more adorable, ten times more kitten-like and ten times more princess-like.

Based on that, here’s how ChatGPT prompted DALL-E 3:

Visualize the ultimate in kitten adorableness, a concept that transcends imagination: a kitten so tiny and fluffy it seems made from clouds, with eyes as large and luminous as moons, radiating pure happiness. This kitten’s crown is a masterpiece of craftsmanship, glowing with a spectrum of precious gems that reflect its regal status. The kitten is cloaked in a majestic, ethereal cape that sparkles with magic, making her appear as if she’s floating. She rests upon a throne of the softest, royal velvet, in hues of deep purple and gold, situated in a mystical garden that seems to be at the heart of an enchanted forest. The entire scene is aglow with a soft, magical light that bathes everything in a heavenly glow, highlighting the kitten’s unparalleled cuteness, her innate kitten essence magnified tenfold, and her royal demeanor elevated to the highest echelon of princesshood. Each element combines to make her not just a kitten princess but a deity of cuteness and royalty, an embodiment of the most profound levels of adorableness, kitten-like innocence, and princess-like grace.

(Disclaimer: I got the prompts ChatGPT passed to DALL-E 3 by asking ChatGPT what they were. For several reasons I consider pretty convincing, I believe its answers. If there’s a way to confirm them for sure, though, I don’t know it.

Pro tip: If you have the paid ChatGPT Plus subscription, which is required, at least for now, to drive DALL-E 3 from chat, it’s super, super easy to give ChatGPT a simple prompt like “adorable kitten princess” or whatever, then ask, “Hey, what was the prompt you passed to DALL-E 3 for that?” ChatGPT will give you back a greatly elaborated prompt, like the example just above, which you can then edit to your heart’s content and pass back into the existing chat, a new chat, or a completely different image generator. It’s pretty fun!)

Certainly the image generator is doing something amazing in examples like this, but what has really progressed over the course of the dialog is the text it’s being presented with. That’s all occurring on the language side.

In robotics, researchers are introducing new generative AI applications by the day, not just for human-robot communications, but also for expanding the ability of robots, including humanoid robots, to understand and operate in the world. For instance, Google’s DeepMind unit has proposed vision-language-action models that enable robots to use knowledge from the web to guide actions, such as, to quote their paper, “figuring out which object to pick up for use as an improvised hammer (a rock), or which type of drink is best suited for someone who is tired (an energy drink)”.

Welcome to our world

At this point it should be clear that language gives the new AI ways to connect to much more of the world than comes to mind when we hear the words “text prediction” or “autocomplete”. But we still haven’t got to the bottom of how general this paradigm is.

Here’s the thing. Words run everything in our world, including us.

When we take a walk in the woods, it’s the sun and the trees that nourish us, but words had a lot to do with making the park, and the words of people like Henry David Thoreau had a lot to do with making our parents want to take us hiking. Words start wars, and words end them. If we get a handle on climate change, words will make it happen.

In Social Talk, the 17th essay in his 1974 book The Lives of a Cell, physician and essayist Lewis Thomas wrote:

Language is, like nest-building or hive-making, the universal and biologically specific activity of human beings. We engage in it communally, compulsively, and automatically. We cannot be human without it; if we were to be separated from it our minds would surely die, as surely as bees lost from the hive.

This is the ultimate reason, the deep reason, why software that speaks our languages can remake the world. The ability of the new AI to use language doesn’t make it human, but as we saw in Part 1, it has deep reflections of humanity built in. It can express that reflected human nature in language, and now we see it as a participant in our quintessential activity — not yet a full participant, but a substantial one, and more so all the time.

Remember Terminator, from the beginning of Part 1? We’re not there yet, but as suggested in the previous section, the new AI, in combination with other developments, is bringing us ever closer to the dream/nightmare (take your pick) of generally capable humanoid robots. But the deeper and insufficiently appreciated point is that by the time they’re here, they’ll be able to talk to us — for real. By then, they’ll be merely the most clearly personified form of something that’s deeply embedded everywhere we look.

Now what does it mean?

Thank you for sticking with me through the three parts of this introduction to the new AI! I hope it’s helpful as you try to sift wheat from chaff out there in media and marketing.

Now that we know what a Gargletwig is, so to speak, we can start thinking about what the new AI means for mortal human beings, and for the societies we live in. See you next time.

If you want more to read…

The kitten princess series in this post was inspired by this story in the New York Times (unlocked link). Not only is the story itself great, but it also includes a ton of fun and instructive links. I particularly enjoyed what happened when rationalist demigod Eliezer Yudkowsky pushed ChatGPT to make an image more and more “normal”.

Janelle Shane writes a fabulous AI humor blog that’s quite instructive too. It’s called AI Weirdness, and a lot of its content is free. Currently she’s writing a lot on the way ChatGPT communicates with DALL-E 3. Her blog is also the source of the immortal “GPT-3 tries pickup lines”.

Megan Garber, at The Atlantic, wrote about the profound nature of autocomplete in 2013! (Unlocked link.)

As far as I can tell, the AI in this story at Nature’s website isn’t generative AI (aka, in my lingo, “the new AI”), but this was too cool not to include.

If you want to give ImageFX a try…

Unless you have a ChatGPT Plus or Midjourney subscription, ImageFX is one of the best ways to get a taste of AI image generation.

Just head over to the ImageFX site at Google’s AI Test Kitchen. You’ll be asked to log in to Google, if you aren’t logged in already, and then you can immediately enter your first prompt.

Maybe even more than in text-to-text, the quality of results you get with any image generation model is really sensitive to the way you prompt. Try to describe what you want concretely and as fully as you can, and keep an eye on the cues ImageFX provides in the prompt window. I also recommend heading over to the Imagen home page just for the examples of simple prompts that get good results. Have fun!

If you want a worthy meditation…

Let’s give a little more thought to this passage, from the above section “Better” doesn’t begin to describe it:

Recall from Part 2 of this introduction that the combined knowledge base and algorithm used by a modern LLM like ChatGPT is neither created by, nor accessible to, us human beings. It’s not in the neural network structure constructed by human programmers; it resides — somewhere and somehow — in the immense, inscrutable wall of numbers (weights) the model learned when it was trained.

We can’t rigorously describe what the model has internalized, but we know its training in text prediction has forced it to infer an astounding amount of real-world knowledge, human perspective, and cognitive (or cognition-like, if you must) sophistication, all of which is brought to bear every time it predicts one token.

The whole generative AI program has been built on the premise of scale: more training data, more parameters, better predictions. This is why LLMs are called large language models (and how it came to pass that there’s such a thing as a small LLM!)

Some authorities think there are limits to the power of scale, but if so, we haven’t hit them yet: bigger models with more parameters (weights in the wall of numbers) make better predictions.

What changes when we increase model size is only one thing: the wall of numbers, which we can surmise encodes more real-world knowledge, more human perspective, and a stronger cognitive (or pseudo-cognitive) “program”. And that change has an effect in only one place: where the model predicts a single token. Yet, masters of language though we are, we can’t perceive improved quality at the level of a single token without looking ahead; we only see it as an attribute of an entire response, like the impressive advice GPT-3.5 gave our test-fearing student.

How does this work, and what does it say about human language and human cognition? It breaks my head, in a good way.

This article originally appeared in AI for Mortals under a Creative Commons BY-ND license. Some rights reserved.