The Future of AI for Mortals

AI for Mortals is a small blog, with a readership under 100, mostly my family and friends. I've never minded that it's small; you're the people I write it for, and I've never tried to promote it more widely. (That said, I'm delighted that a small number of others have found their way here, and you're very welcome indeed!)

Through a strange combination of background, serendipity, and luck, I was already following the new AI from a kind of "insider-adjacent" perspective in 2022. (It's a great story actually, but one I don't have permission to tell. Maybe someday.) As I quickly learned, not only was the new AI doing things I - a supposedly well-informed lifelong gearhead - had never dreamed would happen in my lifetime, it was doing things I had never dreamed of at all.

It was as if a mission had come back from Kepler-186f with a party of walking, talking aliens. It was as if antique humanity had begun to use language all at one moment, and I had been there for it.

Even the people most deeply involved in creating the new AI were only just beginning to understand that what they were doing was one of the epic stories of human civilization: awesome, exhilarating, and terrifying, a great turning of the wheel.

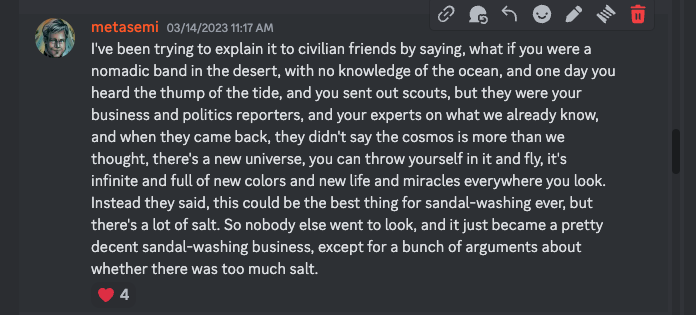

Then ChatGPT came along, and that became the lens through which everyone was introduced to the new AI. As the software industry's Next Big Thing. Here's something I wrote to a private forum at that time:

I watched in dismay as a tsunami of information and misinformation shocked, confused, and alarmed people I love without offering the slightest help toward appreciation of the wonders or understanding of the risks. Nor any clue about why, after decades of portrayal as a dud or a fantasy, AI was now immediately going to overturn the world.

No one was even trying to tell you the real story. Most people commenting in public didn't understand it themselves, and the few who did didn't seem to think it was a story ordinary people could, or would need to, or would even want to understand. But none of these are true!

This blog was born of my frustration watching people I love struggle to make sense of what you were hearing, and grief at the story you were being deprived of: the one that will be remembered in a thousand years as the story of our time.

I've been surprised and deeply moved by how many people were interested in AI for Mortals, and even more so by how many continue to read it in depth, hit me up with fascinating questions, and teach me things I didn't know about the topics discussed. And maybe I'm biased (ya think?), but I'm proud of the posts. I still point people to them as the best serious beginner's AI introduction I know of.

Alas, the last post was June 18th. Quite a few people have asked me when there's going to be another one, or whether I've stopped writing them, or (ouch) why I stopped writing them. Even a couple weeks ago, I was telling people that Robin and I have multi-week off-grid travel coming up in late October and running through November, and that I was determined to get another one or hopefully two posts out before we leave.

It's now clear that's not going to happen - my apologies to those of you I've told that it was. Then it will be the wonderful but notoriously unproductive month of December, with massive post-travel dig-out and catch-up added on top in our case. Realistically, the next time there could possibly be a new AI for Mortals post would be deep into January 2025.

Q. What's the future of a blog on the fastest-moving topic in human history, assuming it pushes out a new post once every six months?

A. It doesn't have one.

I kind of accept that answer. AI for Mortals, as currently constituted, is unsustainable.

But here's the thing. The need it was intended to meet is still there - even more so as the tech giants continue to cement their dominion over not only the AI story, but increasingly the evolution of the technology as well.

The new AI is not a parlor trick, and it's not a neat business opportunity. It's the next great unfolding of our world's quest to know itself. We cannot leave this to the "experts", especially the corporate ones, any more than we can ignore the weather report because we're not meteorologists. A hurricane is coming.

I know that sharing this story is what I'm supposed to be doing, and I'm determined to find a way to keep doing it, if not in a big way, then in a small one.

I'll be doing some serious soul-searching over the next months about how to resume a public voice on the topics we've been looking at here. Maybe that's a revival of AI for Mortals in a new form, maybe it's something different. Whatever it is, I'll make sure you know about it through the same channel where you're seeing this, whether that be on Medium, on metasemi.com, or on the ai-for-mortals Google group.

Onward!

But... Mike... isn't AI already, like, hitting a wall?

A lot of people think so, and a lot of people are saying so. I expect the drumbeat of such commentary to carry on well into 2025, if not beyond. But - trust me on this - the answer is no. No, it is not.

Right now I can't write the post that would really convince you of this, which is too bad, because it would be a good one! But here's a sketch of some key points...

The "hype cycle"

Courtesy of the analyst firm Gartner, tech insiders have a nifty way of talking about a technology's hype cycle. Right now, the first blockbuster application of the new AI - general-purpose chatbots - is just entering the hype cycle phase called the "trough of disillusionment", where it becomes clear that much of what has been touted about the new technology is empty hype.

And wow, I don't know if anything has ever generated as much empty hype as general-purpose chatbots like ChatGPT. But every technology goes through the trough, not just the flashes in the pan. What matters is not how much hype there is, but how much reality is left after the hype is blown away. In the case of the chatbots, there's a lot of reality that will remain after the hype is cleared. (Of course we'll get plenty more new hype to go with it!)

More importantly, the chatbots are just a tiny dot on the vast map of what the tech industry is now doing with the new AI, which in turn is a tiny dot on the vast map of underlying developments that continue to advance at a furious pace.

If you're of an age to remember the closing years of the 1990s, you might recall there was just a bit of hype floating around about a new (actually, newly buzzworthy) thing called "the internet". It made some calmer heads a little nuts. In 1998, future Nobel Prize-winning economist Paul Krugman vented his frustrations thusly:

The growth of the Internet will slow drastically, as the flaw in 'Metcalfe's law' ... becomes apparent: most people have nothing to say to each other! By 2005 or so, it will become clear that the Internet's impact on the economy has been no greater than the fax machine's.

Being watchful for hype is important, but more hype doesn't imply less reality. Often it's exactly the opposite.

Scale is hitting a wall, AI isn't

Up to this point, gains in AI performance have largely been driven by scale: bigger and bigger models that require more and more compute power to run. This, combined with rapidly expanding usage, has increased the economic costs and environmental impact of AI at an alarming rate. (In my view, the popular press has painted a seriously exaggerated picture of the climate impact, but the reality is scary enough.)

This makes a lot of people uneasy about AI's ability to continue making progress, or whether we should even want it to, but the truth is that everyone in the field has been aware for some time that the "just keep scaling" strategy is a dead man walking. I wrote in AI for Mortals #5 about the amazing multi-front progress researchers and industry are making, doing more with less and improving efficiency not just by increments, but in many cases by orders of magnitude.

Your brain is proof that human-level general intelligence can run on about 20 watts of power. Of course, we have no reason to believe we'll be able to get AI to such a level of efficiency easily or quickly. But at the moment, we're heading in that direction at an impressive pace, and there's no end in sight so far.

This doesn't mean there isn't, or shouldn't be, a fight over the climate and water impacts of data centers (which is what people are worried about; AI is only one relatively small part of it). On the contrary, it's urgent to keep building awareness and pressure on this. What it does mean: it's not a hard binary between containing data center impacts and continuing to develop AI. We must, can, and will do both.

It's still not that smart, now is it?

Some pretty smart people still think AI will never live up to its seeming promise because now that we've had a good chance to play with it, we see that it still hallucinates, reasons poorly, needs to be carefully prompted, is easy to mislead, is always too sure of itself, can only emulate a hack writer instead of a gifted one, et cetera, et cetera.

These writers all know that five years ago, the smartest piece of software was as dumb as a stone, whereas today's LLMs are kinda sorta intelligent. I'm sure all would admit that going from "dumb as a lifeless stone" to, for example, "merely a self-trained hack writer" in five years is progress that would have struck every informed person as categorically impossible just a few years ago.

So they must have explanations for why they think further progress is somehow foreclosed, right? And they do. And that would be valid, if the explanations were. "Past performance does not guarantee future results", as investment firms are so fond of telling you.

But I've studied these explanations, and they don't hold up. That would be a long post all by itself, and you're going to have to decide whether to take my word for it, but let me give you an example of just one of these rationales, that of embodiment: the idea being that our human intelligence is an aspect of our existence as beings with individual memories, goals, relationships to the surrounding world, and status as actors within that world. The argument is that an LLM, such as a chatbot, doesn't have such an existence, and thus anything comparable to our intelligence is forever beyond it. There's true insight here, and the embodiment argument is widely considered a particularly compelling reason for skepticism about artificial general intelligence. But c'mon man! This is trivially refuted. You want an LLM to be embodied, put it in a robot. People are already doing that. Case closed.

For the last word, let's go to the brainy Swedes

As I write this, the 2024 Nobel Prizes are being awarded.

On Tuesday, the Nobel committee announced the award for physics: to John J. Hopfield, a physicist, and Geoffrey E. Hinton, an AI researcher, "for foundational discoveries and inventions that enable machine learning with artificial neural networks". You can read their press release here.

Wednesday, the award for chemistry was split between David Baker, a biochemist, "for computational protein design", and Demis Hassabis and John M. Jumper, AI researchers, "for protein structure prediction". (The Hassabis/Jumper one was about Google DeepMind's AlphaFold 2. If you're following along ridiculously closely, you might recall that AI for Mortals #5 highlighted AlphaFold 3 as one of the interesting little events of May 2024. I said then that AlphaFold is "more than a breakthrough; it's a breakthrough factory", and that "people centuries from now may look back on this moment as one of the great turning points in scientific and medical history.") The committee's press release for the chemistry prize is here.

The Nobel Prize committee has a history as a provocateur, and I'm sure there will be some debate about the appropriateness of awarding both physics and chemistry to AI research!

But here's what I think they're trying to tell us: from here on out, AI is a core participant in our most fundamental investigations into the physical world and life itself. The next time you see a casual dismissal based on the idea that AI is about ChatGPT party tricks or one of the tech giants' attempts to sell you a phone, remember that there's a bigger picture. AI is the next great turning of the wheel in our corner of the life-universe's quest to know itself.

It isn't going anywhere but up.

This article originally appeared in AI for Mortals under a Creative Commons BY-ND license. Some rights reserved.